Can machines understand how we feel? Exploring AI and emotional intelligence

“How are you feeling today?”

It’s a simple question that humans ask each other all the time. But what happens when your phone, car, or web browser asks you the same thing? Does it understand your answer, or is it just mimicking empathy?

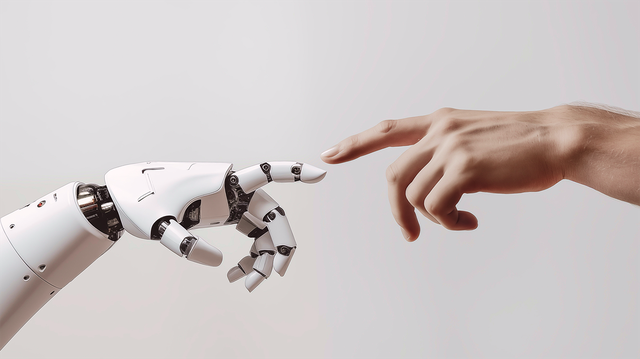

This is the promise and puzzle of emotional intelligence in machines. In a world where we interact with technology constantly, the idea that devices could recognize, interpret, or even respond to human emotions is no longer science fiction. It’s already in motion. But how far can it really go? And should it?

What emotional intelligence means for machines

Emotional intelligence in humans is the ability to perceive, understand, manage, and respond to emotions—both our own and others’. It’s part of what makes us socially aware and empathetic.

When applied to artificial intelligence, emotional intelligence typically refers to a machine’s capacity to detect human emotions through data such as facial expressions, voice tone, word choice, or physiological signals. It then responds in a way that appears appropriate.

This field is often called affective computing. It does not mean the machine feels anything. It means the system can infer how we feel from observable signals and react accordingly.

Emotional AI isn’t about creating machines with feelings. It’s about designing systems that can navigate our feelings.

How AI reads human emotions

AI systems learn to interpret emotions using a combination of sensors, data processing, and machine learning models trained on large datasets of labeled emotional expressions.

Here are some primary methods machines use to read human emotions:

Facial recognition

AI can analyze facial expressions, including fleeting microexpressions, to detect emotions such as anger, surprise, or sadness. The technique is often used in security and marketing.

Voice analysis

By examining vocal elements like pitch, speed, and intensity, AI systems can infer whether a person is stressed, calm, happy, or frustrated.

Text and language cues

Natural language processing (NLP) helps AI interpret emotion based on word choice, sentence structure, and even emojis. Sentiment analysis, which classifies text as positive, negative, or neutral, is commonly used in customer service.
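To make the idea concrete, here is a minimal sketch of the simplest form of sentiment analysis: a lexicon-based scorer that adds up the scores of known words and emojis. The tiny word list and the function names `sentiment_score` and `label` are hypothetical, chosen only for illustration. Production systems typically rely on machine learning models trained on large labeled corpora rather than hand-written lists.

```python
import re

# Hypothetical, hand-picked lexicon for illustration only.
# Positive words/emojis score +1, negative ones score -1.
LEXICON = {
    "great": 1, "happy": 1, "thanks": 1, "love": 1, "🙂": 1,
    "angry": -1, "terrible": -1, "broken": -1, "hate": -1, "🙁": -1,
}

def sentiment_score(text: str) -> int:
    """Sum the lexicon scores of every token in the text."""
    # Lowercase, then split into word tokens and single non-space symbols,
    # so punctuation and emojis become tokens of their own.
    tokens = re.findall(r"\w+|[^\w\s]", text.lower())
    return sum(LEXICON.get(tok, 0) for tok in tokens)

def label(text: str) -> str:
    """Map the summed score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(label("Thanks, I love it 🙂"))       # positive
print(label("My order arrived broken 🙁"))  # negative
print(label("Great, another meeting"))     # positive
```

The last call shows the weakness discussed later in this article: a scorer that only counts words sees "great" and labels a sarcastic complaint as positive.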

Biometric signals

In experimental settings, wearables can measure heart rate, skin conductivity, or eye movement as indicators of emotional state.

Each method has strengths and limitations. Used together, they offer a fuller emotional picture, though the results are far from perfect.
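One common way to combine several methods is late fusion: each modality produces its own probability estimate over the same set of emotion labels, and those estimates are averaged with per-modality weights. The sketch below is a minimal illustration under stated assumptions: the four-label set, the weights, the example probabilities, and the `fuse` helper are all hypothetical, and it does not describe how any particular product works. Real systems often learn the combination rather than hand-setting weights.

```python
# Hypothetical emotion labels shared by every modality.
LABELS = ("happy", "calm", "stressed", "frustrated")

def fuse(predictions: dict[str, dict[str, float]],
         weights: dict[str, float]) -> dict[str, float]:
    """Late fusion: weighted average of per-modality probability estimates.

    `predictions` maps a modality name (e.g. "face", "voice", "text") to that
    modality's probabilities over LABELS. Missing modalities are skipped and
    the weights of the ones present are renormalized.
    """
    total_weight = sum(weights[m] for m in predictions)
    if total_weight == 0:
        raise ValueError("no weighted modality available")
    return {
        label: sum(weights[m] * probs.get(label, 0.0)
                   for m, probs in predictions.items()) / total_weight
        for label in LABELS
    }

# Illustrative inputs: the face looks happy, but voice and text disagree.
predictions = {
    "face":  {"happy": 0.6, "calm": 0.2, "stressed": 0.1, "frustrated": 0.1},
    "voice": {"happy": 0.1, "calm": 0.1, "stressed": 0.6, "frustrated": 0.2},
    "text":  {"happy": 0.1, "calm": 0.1, "stressed": 0.1, "frustrated": 0.7},
}
weights = {"face": 0.2, "voice": 0.4, "text": 0.4}

fused = fuse(predictions, weights)
print(max(fused, key=fused.get))  # frustrated
```

Here the smile points toward "happy", but voice and text both lean toward frustration, so the fused estimate does too. If one sensor is unavailable, the function simply averages over the modalities that are present.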

Where emotional AI is already at work

Emotional AI is already part of many tools and services in everyday life.

Virtual assistants

Assistants like Siri, Alexa, and Google Assistant are not yet emotionally intelligent, but companies are working on making them more emotionally aware. Alexa, for example, can detect frustration in a user’s voice and adjust its tone. Google Assistant may vary its tone based on context.

Customer support chatbots

Many chatbots use sentiment analysis to assess customer tone. If a customer appears angry, the system may escalate the issue to a human representative. Tools like Zendesk and LivePerson include this capability.
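The escalation behavior can be sketched as a simple rule over recent sentiment scores. This is a minimal, hypothetical sketch, not the actual logic used by Zendesk, LivePerson, or any other product: the class name, the three-message window, and the -0.5 threshold are illustrative assumptions, and each per-message score is assumed to come from a separate sentiment model that outputs values in [-1.0, 1.0].

```python
from collections import deque

class EscalationMonitor:
    """Decide when to hand a chat over to a human agent (hypothetical rule).

    Keeps the sentiment scores of the customer's most recent messages and
    escalates when their average drops below a threshold.
    """

    def __init__(self, window: int = 3, threshold: float = -0.5) -> None:
        # deque with maxlen drops the oldest score once the window is full.
        self.scores: deque = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, score: float) -> bool:
        """Record one message's score; return True if the chat should escalate."""
        self.scores.append(score)
        # Wait until the window is full, so the decision rests on several
        # messages rather than the first one alone.
        if len(self.scores) < self.scores.maxlen:
            return False
        average = sum(self.scores) / len(self.scores)
        return average < self.threshold

monitor = EscalationMonitor()
print([monitor.observe(s) for s in (-0.2, -0.6, -0.9)])  # [False, False, True]
```

Averaging over a short window is one way to avoid escalating on a single curt reply. Lowering the threshold makes escalation rarer; raising it makes escalation more frequent.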

Mental health apps

Apps such as Wysa and Woebot use conversational AI for mood check-ins and guided exercises based on cognitive behavioral therapy. They rely on language analysis to tailor responses sensitively.

Driver monitoring systems

Some car manufacturers, including Tesla and BMW, are testing AI that monitors driver fatigue or stress using cameras and sensors. These systems may alert the driver or change settings to improve safety.

Advertising and market research

Brands use facial coding and sentiment analysis to gauge emotional reactions to ads or products. Companies like Affectiva and Realeyes offer this technology for real-time feedback.

What it gets wrong and why that matters

Despite advancements, AI’s emotional intelligence has clear limits. Human emotion is complex, culturally specific, and context-dependent. Machines still struggle with subtlety.

Consider sarcasm. If someone says, “Great, another meeting,” with an eye roll and a sigh, humans recognize the frustration. An AI system that analyzes only the words, however, often sees “great” and classifies the remark as positive.

Bias in training datasets is another issue. Many datasets lack demographic diversity, which can lead to inaccurate emotional readings for people from underrepresented backgrounds or with neurodivergent traits.

Incorrect readings can cause real harm. An AI tool might misread a student’s facial expression and flag them as disengaged. Or a hiring algorithm could mistake nervousness for disinterest.

These risks show why emotional AI must be deployed cautiously and transparently.

Should machines respond to our emotions?

If a machine senses we’re upset, what should it do?

This is not only a technical issue. It’s an ethical one.

For example, if a browser detects anxiety, should it modify how it shows search results? If a child’s smartwatch senses distress, should it alert a parent?

While these features could be helpful, they also raise privacy concerns. How much emotional data are we willing to share? Who owns that data? What happens if companies—or governments—use it to influence our choices?

The boundary between personalization and manipulation can be very thin.

Opera’s approach to user-centric innovation

As emotional AI evolves, the products that respect privacy, transparency, and choice will stand out.

Opera has long prioritized user autonomy. It was the first major browser to offer a built-in VPN, ad blocker, and crypto wallet. These are not gimmicks—they’re tools that give users control.

The browser continues to evolve in ways that respond to user context, not emotion. For instance, the upgraded sidebar now allows users to pin workspaces or mute tabs when focus is needed.

In the future, emotional context may influence how people engage with web content. If that happens, Opera’s approach will remain consistent: features must be optional, respectful, and clear. They will not guess your feelings without consent.

What we should take away from this

Can machines understand how we feel? They can approximate it. They can identify signals, detect patterns, and respond using emotion-based models.

But they do not feel. Their understanding is surface-level at best.

Emotional AI can still be useful—especially in fields like accessibility, mental health, and safety—if applied with care. The goal is not to make machines empathetic. It’s to design systems that support human emotion without exploiting it.

As with any powerful tool, the key question is not what technology can do. It’s how we choose to use it.

That choice still belongs to us. And it matters.

